PARA-Programming

Structured AI-Assisted Development

The Problem

AI coding assistants are powerful, but...

- No memory across sessions

- No audit trail of decisions

- Token costs add up fast

- Work disappears when you close the session

Challenges

How many times have you...

What if your AI assistant had...

Persistent Memory

Complete History

Professional Workflow

Building a Second Brain

"Your mind is for having ideas, not holding them."

David Allen, as quoted by Tiago Forte

A shared cognitive system between human and AI: persistent, auditable, and evolving with each project.

PARA-Programming

A structured methodology for professional AI-assisted development

The PARA Framework

Projects → context/plans/

Areas → context/context.md

Resources → context/data/

Archives → context/archives/

Each project gets a structured memory system

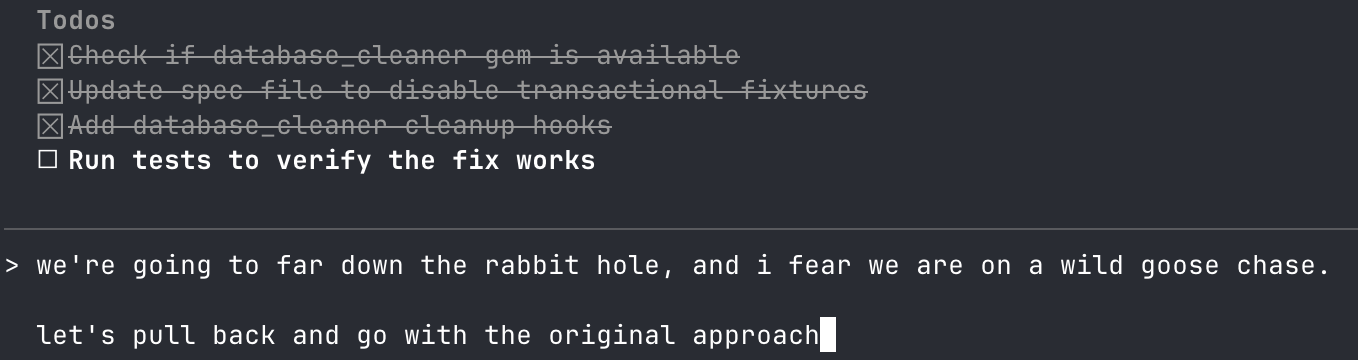

Five-Step Workflow

Every task follows the same process.

Consistent. Auditable. Repeatable.

Pair-Programming with AI

Work with AI as a collaborative partner, not just a code generator

Plans ensure shared understanding

Reviews maintain human oversight

Summaries capture joint decisions

Roles may flip, but context stays synchronized

How It Works

Plan: Document approach

Review: Human approval

Execute: Work in git branches, commit after every todo

Summary: What changed & why

Archive: Preserve history
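As an illustration only, the five steps can be modeled as a small state machine in which execution is blocked until a human approves the plan. The `Task` class and its method names are hypothetical and are not part of the plugin; this is a sketch of the ordering rule, not how the plugin implements it.

```python
from enum import Enum


class Step(Enum):
    PLAN = "plan"
    REVIEW = "review"
    EXECUTE = "execute"
    SUMMARY = "summary"
    ARCHIVE = "archive"


# Steps in the order listed on the slide.
ORDER = list(Step)


class Task:
    """Tracks which workflow step a task is on (hypothetical helper)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.step = Step.PLAN
        self.approved = False

    def approve(self) -> None:
        """Record human approval; only allowed during the review step."""
        if self.step is not Step.REVIEW:
            raise RuntimeError("approval happens in the review step")
        self.approved = True

    def advance(self) -> Step:
        """Move to the next step, refusing to leave review without approval."""
        if self.step is Step.REVIEW and not self.approved:
            raise RuntimeError("plan must be approved before execution")
        index = ORDER.index(self.step)
        if index == len(ORDER) - 1:
            raise RuntimeError("task is already archived")
        self.step = ORDER[index + 1]
        return self.step
```

Calling `advance()` from the review step before `approve()` raises an error, which is the "Review: Human approval" gate expressed in code.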

Complete Audit Trail

Every decision documented.

Every change traceable.

Six months later, you know exactly why.

Plan: "Why are we doing this?"

Commits: "What changed, step by step?"

Summary: "What did we learn?"

Persistent Context

Resume work across sessions

Friday 5pm: Close laptop

Monday 9am: Pick up exactly where you left off

Context lives in files, not just memory.
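A minimal sketch of what "context lives in files" could look like: one function writes the work state before the session ends, another reads it back in the next session. The `active_plan` and `next_todo` keys and the `key: value` layout are assumptions made for this example; the source does not specify the actual format of context.md.

```python
from pathlib import Path


def save_state(context_file: Path, active_plan: str, next_todo: str) -> None:
    """Write the current work state to disk before the session ends."""
    context_file.parent.mkdir(parents=True, exist_ok=True)
    context_file.write_text(
        "# Current Work\n"
        f"active_plan: {active_plan}\n"
        f"next_todo: {next_todo}\n",
        encoding="utf-8",
    )


def load_state(context_file: Path) -> dict:
    """Read the saved state back at the start of a new session."""
    state = {}
    for line in context_file.read_text(encoding="utf-8").splitlines():
        # Skip the heading and any line that is not a key: value pair.
        if line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        state[key.strip()] = value.strip()
    return state
```

Because the state is an ordinary file, it can also be committed to git and reviewed like any other change.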

Token Efficiency

Load only the context you need

Use fewer tokens by design

Plan-scoped context vs. entire codebase

Reference files on-demand, not upfront

Result: Lower costs, faster responses
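To make the plan-scoped idea concrete, the sketch below compares the estimated cost of loading every file against loading only the files a plan references. The four-characters-per-token rule is a rough heuristic assumed for illustration, not a real tokenizer, and the file names are invented.

```python
from typing import Optional


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about four characters per token (assumption)."""
    return len(text) // 4


def context_cost(files: dict, wanted: Optional[set] = None) -> int:
    """Sum estimated tokens for the wanted paths, or for all files if None."""
    return sum(
        estimate_tokens(body)
        for path, body in files.items()
        if wanted is None or path in wanted
    )


# Invented stand-in for a codebase: path -> file contents.
codebase = {
    "src/auth.py": "x" * 4000,
    "src/billing.py": "x" * 40000,
    "src/reports.py": "x" * 36000,
}
plan_scope = {"src/auth.py"}  # files the plan actually references

full_cost = context_cost(codebase)
scoped_cost = context_cost(codebase, plan_scope)
```

The ratio between `scoped_cost` and `full_cost` depends entirely on how narrowly the plan is scoped; the numbers here only demonstrate the comparison.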

Example: Add Authentication

1. Plan: /para-program:plan "add authentication"

→ Creates structured plan document

2. Review: Human validates approach

3. Execute: /para-program:execute

→ Creates branch, tracks todos, commits incrementally

4. Summarize: /para-program:summarize

→ Documents what changed and why
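The execute step is described as creating a branch and committing incrementally. One way to picture that, purely as a sketch, is a function that builds the git commands for a task: one new branch, then one commit per todo. The `para/` branch prefix and both helper functions are assumptions for this example; the plugin's actual naming and behavior are not documented here.

```python
import re


def branch_name(task: str) -> str:
    """Turn a task description into a branch name (naming scheme is assumed)."""
    slug = re.sub(r"[^a-z0-9]+", "-", task.lower()).strip("-")
    return f"para/{slug}"


def execution_commands(task: str, todos: list) -> list:
    """Return git commands: one new branch, then one commit per todo."""
    commands = [["git", "checkout", "-b", branch_name(task)]]
    for todo in todos:
        # The code change for this todo would be made before staging.
        commands.append(["git", "add", "-A"])
        commands.append(["git", "commit", "-m", todo])
    return commands
```

The function only builds the command lists; passing each one to `subprocess.run` inside a repository would carry them out.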

Persistent Memory Layer

context/
├── data/        # Datasets, inputs, API payloads
├── plans/       # What you're planning to do
├── summaries/   # What you did and why
├── archives/    # Historical snapshots
├── servers/     # MCP tool wrappers
└── context.md   # Active work state

Files survive session boundaries.

Your work persists.
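For readers who want to see the layout created by hand, here is a small sketch that builds the same directory tree. The `scaffold` function is hypothetical, and the placeholder heading written to context.md is an assumption; `/para-program:init` may produce different contents.

```python
from pathlib import Path

# Subdirectories from the tree on this slide.
SUBDIRS = ["data", "plans", "summaries", "archives", "servers"]


def scaffold(project_root: Path) -> Path:
    """Create the context/ layout; safe to run more than once."""
    context = project_root / "context"
    for name in SUBDIRS:
        (context / name).mkdir(parents=True, exist_ok=True)
    state_file = context / "context.md"
    if not state_file.exists():
        # Placeholder content; the real file format is not specified here.
        state_file.write_text("# Current Work\n", encoding="utf-8")
    return context
```

Running it twice leaves an existing context.md untouched, so it will not overwrite work state that is already there.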

MCP Integration Strategy

Model Context Protocol for efficient data access

Lazy Loading: Load only necessary tool definitions

Preprocessing: Filter large datasets in code before sending to model

Privacy: Keep sensitive data outside context when possible

context/servers/ holds tool wrappers for on-demand access
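The preprocessing and privacy points can be sketched without any MCP library: filter the dataset in code, drop sensitive fields, and cap the row count before anything reaches the model. The field names (`status`, `email`, `ssn`) and the `preprocess` function are invented for this example.

```python
from typing import Any

SENSITIVE_KEYS = {"email", "ssn"}  # hypothetical field names


def preprocess(rows: list, status: str, limit: int = 5) -> list:
    """Keep only matching rows, drop sensitive fields, and cap the row count."""
    selected: list = []
    for row in rows:
        if len(selected) >= limit:
            break
        if row.get("status") != status:
            continue
        cleaned: dict = {k: v for k, v in row.items() if k not in SENSITIVE_KEYS}
        selected.append(cleaned)
    return selected
```

A wrapper stored under context/servers/ could apply a step like this to a tool's raw output, so that only the small, cleaned result enters the prompt.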

Get Started in 5 Minutes

# Install the plugin
/plugin install para-program@brian-lai/para-programming-plugin

# Initialize in your project
/para-program:init

# Start your first task
/para-program:plan "your task here"

Works with Claude Code, Cursor, Copilot, and more.

Why This Works

Mirrors how humans and LLMs process information

Prompt window = Working memory (short-term)

Context directory = Long-term memory

context.md = Prefrontal cortex (what to focus on)

Keep only relevant context active → more accurate reasoning

Backed by Research

Anthropic's MCP research validates this approach

LLMs perform best with minimal, purposeful context

Externalized computation lets models focus on reasoning

Internal benchmarks: up to 98.7% token reduction while improving accuracy and determinism

Build Professional Software with AI

github.com/brian-lai/para-programming-plugin

Questions?